Dramatically increased processing speed, memory bandwidth, and power efficiency

🟦 Meta announces its second in-house AI inference accelerator “MTIA v2”!

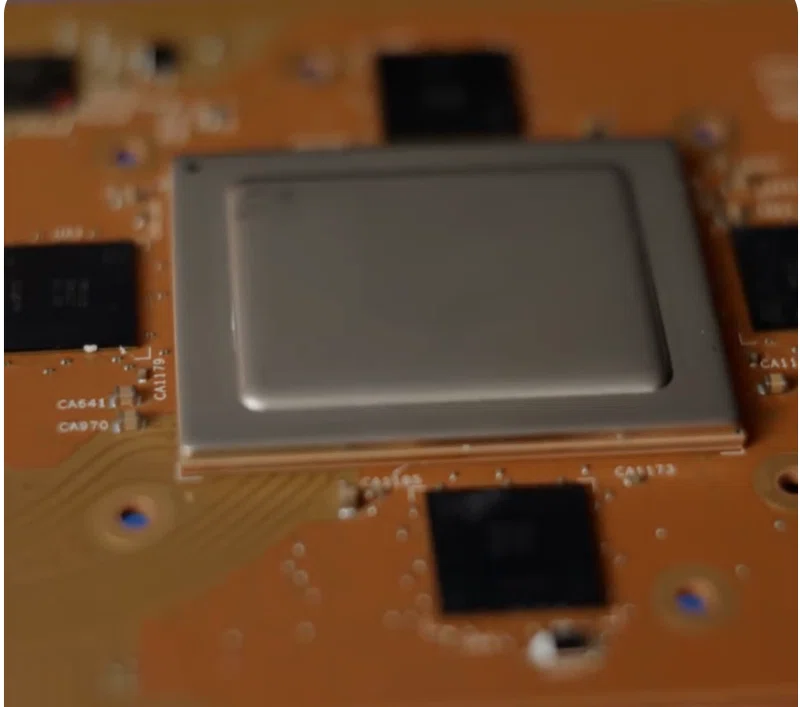

Meta has announced MTIA v2, the second generation of its in-house AI inference accelerator, MTIA. The chip is intended to strengthen the recommendation of ads and content shown on social networking services such as Facebook and Instagram.

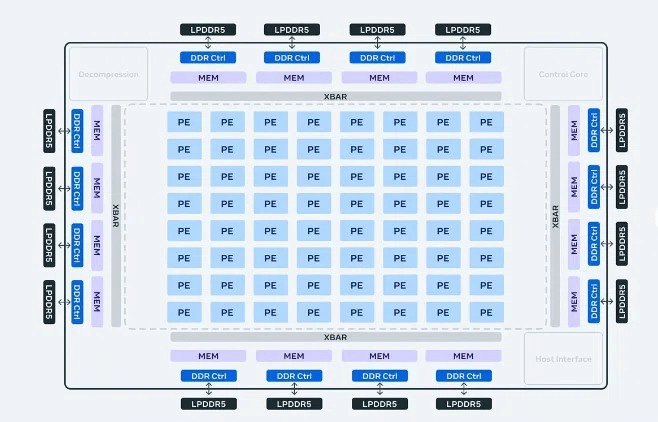

MTIA v2 significantly improves processing speed, memory bandwidth, and power efficiency through the following innovations:

- 5nm process: Moving from MTIA v1’s 7nm process to a 5nm process increases transistor density and improves power efficiency.

- New architecture: MTIA v2 adopts a revised architecture designed to increase compute throughput and memory access efficiency.

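- High-performance memory: MTIA v2 increases memory bandwidth and capacity. According to Meta's published specifications, it pairs a larger on-chip SRAM with off-chip LPDDR5 memory rather than HBM.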

🟦 MTIA v2 Data Center Deployment and AI Capabilities

Meta has already deployed MTIA in some of its data centers and is actively incorporating AI capabilities into its products. The goal is to secure the computational infrastructure that is essential for developing and using AI, and to advance its AI technology further. Meta plans to continue strengthening its in-house semiconductor development.

MTIA v2 is up to three times as efficient as its predecessor on some inference workloads. It reportedly delivers performance comparable to the NVIDIA A100 and Google TPU v4 at lower power consumption.

🟦 Summary

Meta has announced MTIA v2, an AI inference accelerator. Meta will continue to strengthen its in-house semiconductor development so that chips like MTIA v2 can provide the computational infrastructure essential for developing and using AI.

We hope that MTIA v2 will also help Meta curb fraudulent advertising effectively.